FLUF Test: A Framework for Critical Evaluation of Content Generated with Artificial Intelligence © 2023 by Dr. Jennifer L. Parker is licensed under CC BY-NC-SA 4.0.

What is the FLUF Test?

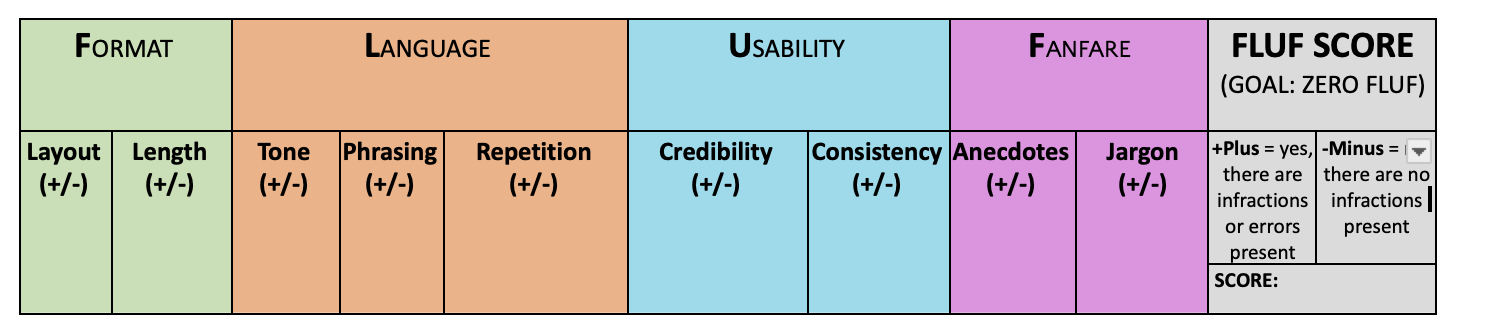

The FLUF Test is a framework for critically evaluating content generated through artificial intelligence. The framework encourages the user to look critically at format, language, usability, and fanfare when considering the outputs of AI tools. AI-generated results can be flowery, inaccurate, or repetitive, for example. Using AI tools brings with it a responsibility to be educated about their power, usefulness, and shortcomings.

Traditionally, online search results have been critiqued by frameworks like SIFT (Caulfield, 2019); CARRDSS (Valenza, 2004); CRAAP (Blakeslee, 2004); and 5 Key Questions (Thoman & Jolls, 2003). However, these models fail to take into consideration AI prompt generation and re-prompting. Prior to the FLUF Test (Parker, 2023), little guidance existed to critique AI generative results or improve prompts. In 2023, I developed the FLUF Test, based on many years as a teacher of information and digital media literacy skills across PK20 environments.

With the FLUF Test, you look specifically at format, language, usability, and fanfare, and at the indicators for each. The goal is a result with zero FLUF, that is, zero infractions in the generated results. The FLUF Test uses a simple rubric of plus (+) or minus (-), where a plus counts as one and a minus counts as zero. Each issue or infraction found in an AI generative result is assessed a “plus” and receives a score of one. The infractions are tallied to get the FLUF score. When the score is anything other than zero, the user is encouraged to re-prompt, regenerate, and repeat until the AI generative result scores a zero.
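The tally described above can be sketched in a few lines of Python. This is a minimal illustration, not part of the published framework: the category and indicator names come from the FLUF Test, while the dictionary layout and the `fluf_score` function name are assumptions made for the example.

```python
# Hypothetical representation of the FLUF rubric. The category and indicator
# names come from the framework; the data layout is an assumption.
FLUF_INDICATORS = {
    "Format": ["Layout", "Length"],
    "Language": ["Tone", "Phrasing", "Repetition"],
    "Usability": ["Credibility", "Consistency"],
    "Fanfare": ["Anecdotes", "Jargon"],
}


def fluf_score(infractions):
    """Tally the FLUF score: one point per (category, indicator) infraction."""
    score = 0
    for category, indicator in infractions:
        if indicator not in FLUF_INDICATORS.get(category, []):
            raise ValueError(f"Unknown FLUF indicator: {category}/{indicator}")
        score += 1  # each infraction is a "plus", worth one point
    return score


# Example: a result that is too long and cannot be verified.
found = [("Format", "Length"), ("Usability", "Credibility")]
print(fluf_score(found))  # 2, so re-prompt and regenerate
print(fluf_score([]))     # 0, which is "zero FLUF"
```

The reviewer still decides what counts as an infraction; the code only adds up the pluses.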

Introduction to FLUF

The template guides your journey as you use the FLUF indicators to write prompts, critically evaluate results, and repeat the process until you reach zero FLUF. Explore the framework (pages 1-12) or check out the sample scenario of the FLUF Test in Action (pages 13-21). Watch this video to learn how it works.

Overview

What Is FLUF?

FLUF comes in many shapes and forms and can be applied to a variety of AI results including images or text/LLMs.

In this example, we see two infractions - an issue with the Format length, and with the Usability credibility. The FLUF score is two (2).

Note that for each error there is a plus or a point assessed as an infraction.

Any score other than zero means re-prompting, regenerating, and repeating until you get the intended result.

FLUF Look For's

Format Look For's

The “F” in FLUF stands for Format, where the user analyzes Layout or Length of the AI generative results. To get “zero FLUF” the Layout and Length should be appropriate. Issues might include an essay when the desired result is an outline, or MLA citations when APA format is desired.

Language Look For's

The “L” in FLUF stands for Language, which assesses Tone, Phrasing, and Repetition. When examining Tone, consider whether the writing is in the appropriate style. Phrasing should be clear, without awkward presentation of information. Finally, consider whether there is Repetition to fill the word count or space. For a “zero FLUF” score in the Language section, AI generative results use the appropriate tone, present clear ideas, and share information succinctly.

Usability Look For's

The “U” in FLUF is for Usability and assesses Credibility and Consistency. When analyzing the AI generative results, check that the information is valid, appropriately cited, and from a reliable source. AI tools often present “hallucinations” by portraying false information as true. “Zero FLUF” in Usability means the AI generative result comes from documented and credible sources, information can be verified as true, and information is consistent with findings on the topic from other sources.

Fanfare Look For's

The last “F” in FLUF is for Fanfare, which asks whether the result addresses its audience appropriately in its use of Anecdotes and Jargon. Assess the AI generative results for the appropriate mix of technical vocabulary and information. For example, academic writing should be free from anecdotes and jargon. Legal terms are appropriate for an audience of lawyers, whereas medical jargon would fit nicely into a request from a doctor. “Zero FLUF” means appropriate use of terms and vocabulary without cliches. Here, you are looking for the writing to present information in an informative and specific way, in the style you intend.

Any infractions (pluses) indicate the need to regenerate or re-prompt the results with the AI generator. Starting with a good prompt helps reduce infractions and get to "Zero FLUF".

Get Started: FLUF Your Prompts and AI Results

The FLUF Test is presented to encourage users to expand their media and information literacy, and to extend their critical evaluation to all information, no matter how it is obtained. For an overview of the rationale leading to the FLUF Test framework and a detailed walk-through of the FLUF experience, view the presentation.

Explore the slide deck to check out the examples.

- Using FLUF to create better prompts

- FLUF Testing AI generative results

- Examples of FLUF Test infractions

Create an AI Prompt with FLUF

Use the 2-page template to create a prompt guided by the FLUF indicators for your AI tool, then use the FLUF Test to evaluate results.

Explore the FLUF Experience (Prompt to Critical Evaluation)

How to Use the FLUF Test

- Review FLUF indicators – format, language, usability, fanfare

- Create a prompt using FLUF guidance

- Generate results and FLUF test

- Update prompt; regenerate; FLUF test

- Repeat until happy with results and zero FLUF

- Combine AI Results & Human Creativity and Critique to Generate a Final Product
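The generate, test, and re-prompt cycle in the steps above can be sketched as a simple loop. This is an illustration under stated assumptions: `fluf_loop`, `generate`, `evaluate`, `revise`, and `max_rounds` are hypothetical names introduced here, and the three callables stand in for an AI tool and a human reviewer rather than any real API.

```python
def fluf_loop(prompt, generate, evaluate, revise, max_rounds=5):
    """Re-prompt until the result scores zero FLUF or max_rounds is reached.

    generate(prompt) -> result text from the AI tool
    evaluate(result) -> list of infractions found by the reviewer
    revise(prompt, infractions) -> updated prompt
    All three are hypothetical callables supplied by the caller.
    """
    if max_rounds < 1:
        raise ValueError("max_rounds must be at least 1")
    history = []
    for _ in range(max_rounds):
        result = generate(prompt)
        infractions = evaluate(result)
        history.append((prompt, len(infractions)))  # FLUF score per round
        if not infractions:  # zero FLUF reached
            break
        prompt = revise(prompt, infractions)
    return result, history


# Stand-in functions so the sketch runs without any AI tool.
def fake_generate(prompt):
    return "outline" if "outline" in prompt else "essay"


def fake_evaluate(result):
    return [] if result == "outline" else [("Format", "Layout")]


def fake_revise(prompt, infractions):
    return prompt + " Format the response as an outline."


result, history = fluf_loop(
    "Summarize the water cycle.", fake_generate, fake_evaluate, fake_revise
)
print(result)                             # outline
print([score for _, score in history])    # [1, 0]
```

The loop covers only the repeat-until-zero part of the process; the final step, combining the AI result with human creativity and critique, remains a human task.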

Fixing FLUF with Re-Prompts

Got FLUF? Here are some examples of how you can re-prompt your AI tool to improve results.

FORMAT

- Layout: “Reformat the essay in APA 7th edition format”; “Add subheadings using MLA”; “Add the in-text parenthetical references”

- Length: “Reduce the essay length to 3-5 sentences”; “Expand the essay length to 750 words”

LANGUAGE

- Tone: “Use a more business-like tone”; “Address the audience using a conversational tone”

- Phrasing: “Rephrase the last sentence to make it less formal”; “Rephrase the last paragraph to include key vocabulary terms”

- Repetition: “Reduce repetition around students and expand essay to include teachers”

USABILITY

- Consistency: “Please add a step that includes [describe]”; “Include a step where [describe]”; “Remove the part where [describe]”

- Credibility: “Remove all information that did not come from a peer reviewed article”; “Only include information from a peer reviewed article”; “Share the sources of the information”

FANFARE

- Anecdotes: “Remove the funny stories, magazine quotes, or cliches”; “Rephrase the essay to make it more technical and fact based”; “Add key vocabulary and rephrase to sound more formal and professional”

- Jargon: “Remove the medical jargon and make it more conversational and descriptive”; “Add in legal jargon and case examples to support the central idea”
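One way to put these examples to work is a lookup from each infraction to a starter re-prompt. The sketch below is illustrative only: the re-prompt wording is taken from the examples above, while the `REPROMPTS` table and the `suggest_reprompts` function are hypothetical names, and choosing one example per indicator is an arbitrary simplification.

```python
# One example re-prompt per indicator, copied from the examples above.
# The lookup structure itself is an assumption made for illustration.
REPROMPTS = {
    ("Format", "Layout"): "Reformat the essay in APA 7th edition format",
    ("Format", "Length"): "Reduce the essay length to 3-5 sentences",
    ("Language", "Tone"): "Use a more business-like tone",
    ("Language", "Phrasing"): "Rephrase the last sentence to make it less formal",
    ("Language", "Repetition"): (
        "Reduce repetition around students and expand essay to include teachers"
    ),
    ("Usability", "Consistency"): "Remove the part where [describe]",
    ("Usability", "Credibility"): "Share the sources of the information",
    ("Fanfare", "Anecdotes"): "Remove the funny stories, magazine quotes, or cliches",
    ("Fanfare", "Jargon"): (
        "Remove the medical jargon and make it more conversational and descriptive"
    ),
}


def suggest_reprompts(infractions):
    """Return a starter re-prompt for each recognized infraction, in order."""
    return [REPROMPTS[key] for key in infractions if key in REPROMPTS]


for line in suggest_reprompts([("Format", "Length"), ("Usability", "Credibility")]):
    print(line)
```

In practice the re-prompt should be adapted to the task at hand, for example by replacing `[describe]` with the specific step to remove.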

Authentic Assignments with AI: iSearch, CRAAP, FLUF and You!

Looking for an authentic learning experience that empowers students to critically evaluate online sources, AI generated content, and document their research journey?

Explore the Lesson Plan and Templates. This document includes the entire iSearch process:

First, students learn about iSearch. The iSearch protocol provides us with a different way of thinking about writing a paper (Macrorie, 1988). The topic, issue, or challenge is described, followed by what the student knows and wants to know. Resources for the paper come in a variety of forms, both print and non-print, and all sources must be critically evaluated for their usefulness. In addition, the use of AI to refine and polish the writing is expected and encouraged. The essay concludes by sharing the significance of the research experience, the student's growth as a researcher, and the works cited.

Second, students learn to critically evaluate online resources with CRAAP (or another framework). Educators have long targeted information literacy skills so that students can critically evaluate online sources using protocols like SIFT (Caulfield, 2019); CARRDSS (Valenza, 2004); CRAAP (Blakeslee, 2004); and 5 Key Questions (Thoman & Jolls, 2003).

Third, students use AI in their search journey and critique using FLUF. Students must now expand their critical evaluation skills to include AI generated results. Guided by protocols for information literacy, as well as best practices in prompt generation, the FLUF Test has emerged as a primary tool. Focusing on format, language, usability, and fanfare, this framework encourages the AI user to create better prompts and critique AI generative results.

Finally, students write the iSearch paper. The iSearch presents itself as a real possibility for engaging students in the use of AI in the true essence of the 80/20 Rule. Students set about describing the process of gathering and critiquing online resources, prompting and critically evaluating AI generated results, and putting their own creativity on the final product for submission.

Underpinnings

Here are some of the foundational pieces for this work:

The 80/20 Rule (Pareto Principle, 1896)

The balance between human insight and technological capability

80% research and regeneration of online sources

20% human critique, creativity, and culmination to create a final output

Information Literacy: Frameworks for Critical Evaluation of Online Resources

CRAAP (Blakeslee, 2004) – currency, relevance, authority, accuracy, purpose

CARRDSS (Valenza, 2004) – credibility, accuracy, reliability, relevance, date, sources, scope

SIFT (Caulfield, 2019) – stop, investigate, find, trace

5 Key Questions (Thoman & Jolls, 2003) – creator, techniques, perceptions, bias, purpose

ISTE Standards for Educators (2017): Citizen Standard

When considering AI policies, mentoring students in ethical and appropriate use of digital resources falls under the guidelines of digital citizenship. According to the ISTE Educator Standard for “Citizen”, “Educators inspire students to positively contribute to and responsibly participate in the digital world.” (International Society for Technology in Education [ISTE], 2017).

2.3a. Create experiences for learners to make positive, socially responsible contributions and exhibit empathetic behavior online that build relationships and community.

Indicator 2.3a provides guidance on how students should interact and be socially responsible in their use of AI.

2.3b. Establish a learning culture that promotes curiosity and critical examination of online resources, and fosters digital literacy and media fluency.

In 2.3b, we see the essence of critical evaluation. Traditionally, information literacy skills included critically evaluating online sources using protocols like SIFT (Caulfield, 2019); CARRDSS (Valenza, 2004); CRAAP (Blakeslee, 2004); and 5 Key Questions (Thoman & Jolls, 2003). We extend this to include FLUF (Parker, 2023) for critical evaluation of AI.

2.3c. Mentor students in safe, legal and ethical practices with digital tools and the protection of intellectual rights and property.

Indicator 2.3c directs educators on mentoring of students on ethical practices while using digital tools like AI, and reminds us to cite our use of AI as well as adhere to copyright, intellectual property, and fair use guidelines.

2.3d. Model and promote management of personal data and digital identity, and protect student data privacy.

Finally, in 2.3d the emphasis is on data privacy, digital identity, and personal data. This indicator causes users to pause when uploading research, sensitive information, or personal data into an AI generative tool.

Critical Evaluation of AI: FLUF Test (Parker, 2023)

The FLUF Test is presented to encourage users to expand their media and information literacy, and to extend their critical evaluation to all information, no matter how it is obtained. For an overview of the rationale leading to the FLUF Test framework and a detailed walk-through of the FLUF experience, view the presentation. For more information about the FLUF Test, templates, or a consultation, contact me.

